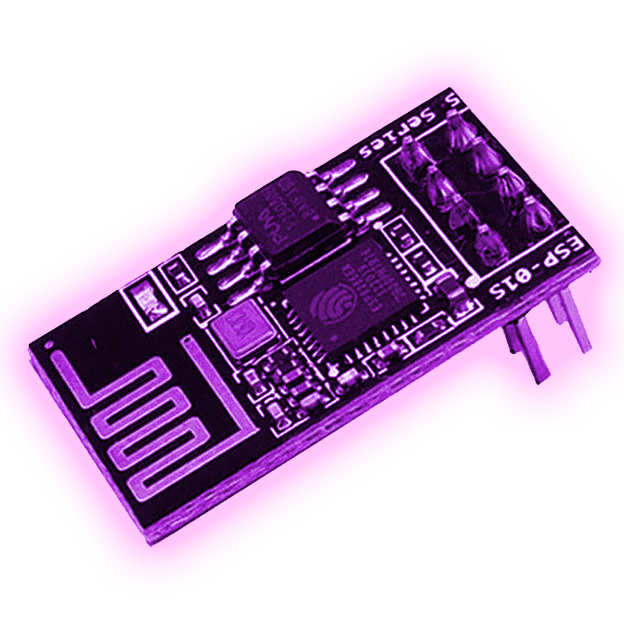

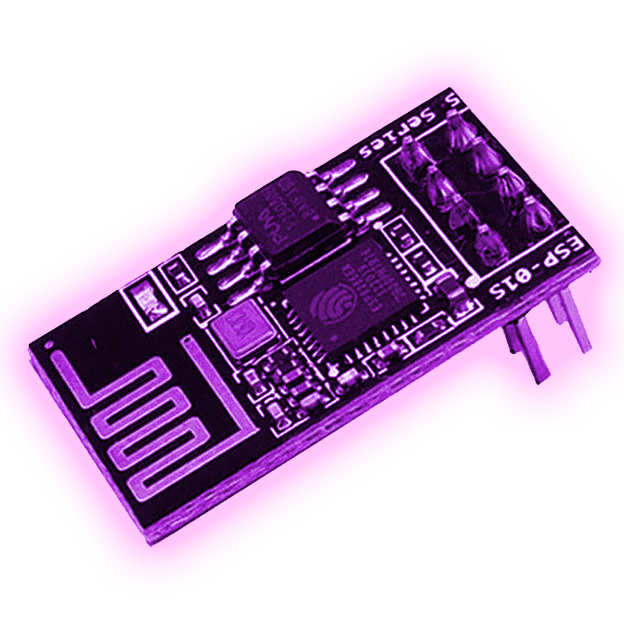

I’ve been looking at ESP32s with wired (Ethernet) connectivity lately. PoE would be a nice touch.

This looks like it only skips sponsor segments inside the videos. Does it also block pre-roll/post-roll ads?

My setup is similar. My main “desktop” is a Slackware VM accessed through VNC/Guacamole.

I’d suggest Alpine too. Works great for me so far.

Correct. SearXNG is very much still active. Check the GitHub page or the Matrix/IRC channels.

Cloudflare Zero Trust tunnels might be up your alley. Look into those. They’re free, but your traffic passes through (and is decrypted at) Cloudflare’s edge, which raises privacy concerns, so do your homework.
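A minimal Docker Compose sketch of running the tunnel connector, assuming you’ve already created a remotely managed tunnel in the Zero Trust dashboard and saved its token in a `.env` file as `TUNNEL_TOKEN` (a variable name chosen here for illustration). The connector only makes outbound connections, so nothing has to be port-forwarded on your router.

```yaml
services:
  cloudflared:
    image: cloudflare/cloudflared:latest
    restart: unless-stopped
    # Runs a remotely managed tunnel; TUNNEL_TOKEN is an assumed variable
    # that Compose interpolates from a .env file next to this compose file.
    command: tunnel --no-autoupdate run --token ${TUNNEL_TOKEN}
```

Which hostnames map to which internal services is then configured on the tunnel in the dashboard, not in this file.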

Forgive my stupidity, but couldn’t you just use split-horizon DNS and have your internal DNS resolve to your homelab instead of the VPS? Personally, that’s what I’ve done. External lookups for sub.domain.tld resolve to the VPS, and internal lookups resolve to 10.10.10.x.

So, Docker networking uses its own internal DNS. Keep that in mind. You can (and should) create Docker networks for your containers; containers on the same user-defined network can reach each other by service or container name. My personal design is to have only nginx publish port 443 and proxy for all the other containers inside those Docker networks, so I don’t have to expose anything else. I also find plain nginx much easier to deal with than Nginx Proxy Manager (NPM), Traefik, or Caddy.
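A minimal Compose sketch of that layout, where only nginx publishes a port and the backend is reachable solely over a shared network. The `app` service, its image, and the host paths are hypothetical placeholders:

```yaml
services:
  nginx:
    image: nginx:stable
    ports:
      - "443:443"                 # the only port published on the host
    volumes:
      - ./nginx/conf.d:/etc/nginx/conf.d:ro   # hypothetical config path
      - ./certs:/etc/nginx/certs:ro           # hypothetical cert path
    networks:
      - proxy
  app:
    image: example/app:latest     # hypothetical backend service
    # No "ports:" key, so nothing from this container is published on the host.
    networks:
      - proxy                     # Docker's embedded DNS resolves "app" here

networks:
  proxy:
    driver: bridge
```

Inside the nginx config, the backend would then be addressed by service name, e.g. `proxy_pass http://app:8080;` (the port is whatever the hypothetical app listens on).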

Why did you register two separate domains instead of using a wildcard cert from Let’s Encrypt and just using subdomains?
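A minimal sketch of issuing a wildcard certificate with the official certbot DNS-plugin image. Wildcards require the DNS-01 challenge, so this assumes the domain’s DNS is hosted on Cloudflare; the email address and credentials path are placeholders:

```yaml
services:
  certbot:
    image: certbot/dns-cloudflare:latest   # image entrypoint is "certbot"
    volumes:
      - ./letsencrypt:/etc/letsencrypt                       # issued certs land here
      - ./secrets/cloudflare.ini:/secrets/cloudflare.ini:ro  # placeholder API credentials file
    # One cert covering the apex and every first-level subdomain.
    command: >
      certonly --non-interactive --agree-tos -m you@domain.tld
      --dns-cloudflare --dns-cloudflare-credentials /secrets/cloudflare.ini
      -d domain.tld -d "*.domain.tld"
```

A single `*.domain.tld` cert then covers sub.domain.tld, other.domain.tld, and so on, without a second registration.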

Sure did. I totally tried recording sounds of the coins dropping in. Never worked but I was too young to know why.

I’ll have to check this out. Have you run it in a container or as a native app?

Kind of. I’m thinking of something along the lines of Sonarr/Radarr/etc., but with the ability to play/stream the podcast instead of downloading it. I tend to use web interfaces for stuff like that at work, since I can’t really use my phone there. Maybe I’ll have to look into a roll-your-own solution using some existing tools. Was hoping I wouldn’t have to.

links is pretty lightweight. All joking aside, I’d look at adding RAM to it if possible. That’s probably going to help the most.

Also, to add to this: your setup sounds almost identical to mine. I have a NAS with multiple TBs of storage and another machine with plenty of CPU and RAM. Using NFS for your Docker share is going to be a pain. I “fixed” my pains by defining the NFS shares inside my docker-compose files. What I mean by that is: specify your share in a top-level `volumes` section:

```yaml
volumes:
  media:
    driver: local
    driver_opts:
      type: "nfs"
      o: "addr=192.168.0.0,ro"              # NAS address and mount options (read-only)
      device: ":/mnt/zraid_default/media"   # export path on the NAS
```

Then mount that volume when the container comes up:

```yaml
services:
  ...
    volumes:
      - type: volume
        source: media
        target: /data
        volume:
          nocopy: true   # don't copy the image's existing /data contents into the volume
```

This way, Docker mounts the share itself whenever the container starts, so I don’t have to worry as much about NFS mounts on the host. I also use local directories for storing all my container data, e.g. `./container-data:/path/in/container`.

Basically, when you make a new group or user, make sure the NUMBER it’s using matches whatever you’re using on your export. For example, if you run `groupadd -g 5000 nfsusers`, make sure that when you create the share on your NAS, you use a GID of 5000 no matter what you actually name it. Personally, I keep the names and GIDs/UIDs the same across systems for ease of use.
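On the container side, the same rule applies: pin the numbers, not the names. A minimal Compose sketch, assuming UID 1000 (hypothetical) and the GID 5000 from the `groupadd` example; the image name and host path are also hypothetical:

```yaml
services:
  app:
    image: example/app:latest   # hypothetical service
    # Numeric UID:GID; GID 5000 must match the group on the NAS export.
    # The group name inside the container is irrelevant.
    user: "1000:5000"
    volumes:
      - /mnt/nas/media:/data    # hypothetical host path where the NFS share is mounted
```

Some images (the linuxserver.io ones, for example) expect `PUID`/`PGID` environment variables instead of `user:`, but the numbers still have to line up the same way.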

I’m 100% sure that your problem is permissions. You need to make sure the permissions match. Personally, I created a group specifically for my NFS shares, and when I export them they are mapped to that group. You don’t have to do this; you can use your normal users, you just have to make sure the UID/GID numbers match. They can be named differently as long as the numbers match up.

Goddamnit, I didn’t even think about this when I saw they were doing the mass delete. Here’s hoping they’ll at least keep the videos up. Waaaay too much stuff on YT to lose it all. Does anyone know if archive.org is backing them up?