You want someone else to pay for the disk space and network availability for your videos?

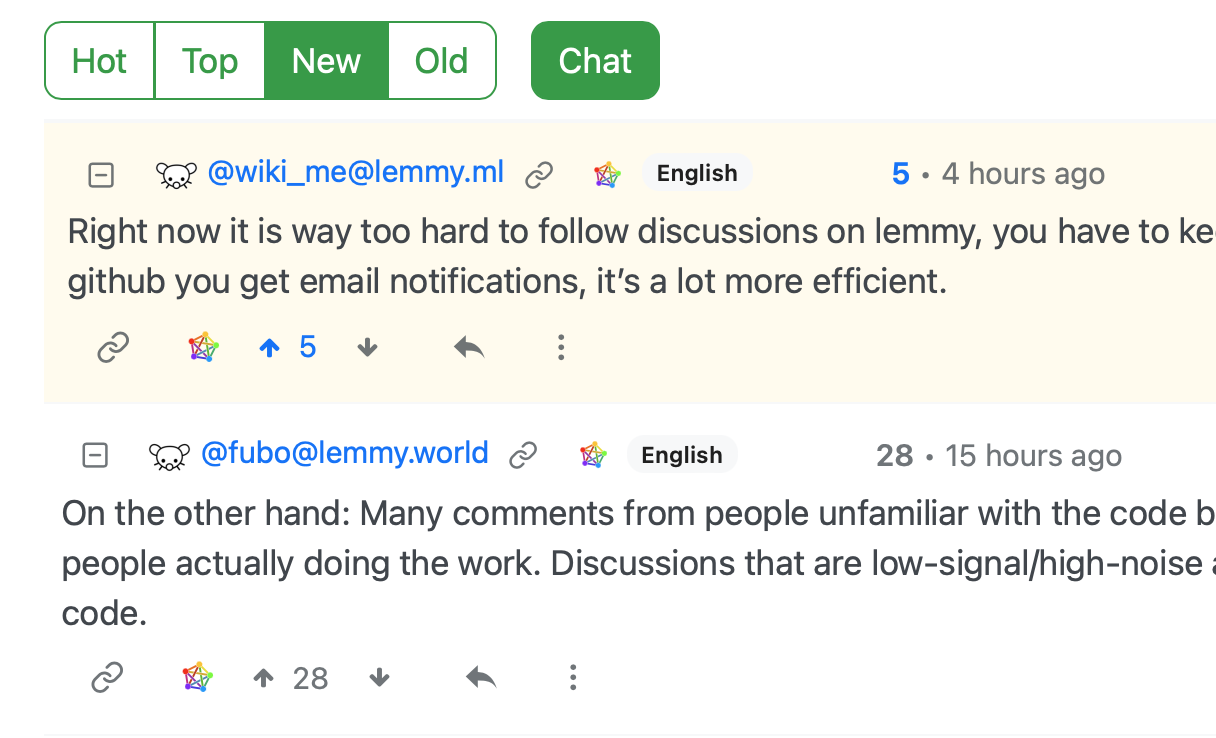

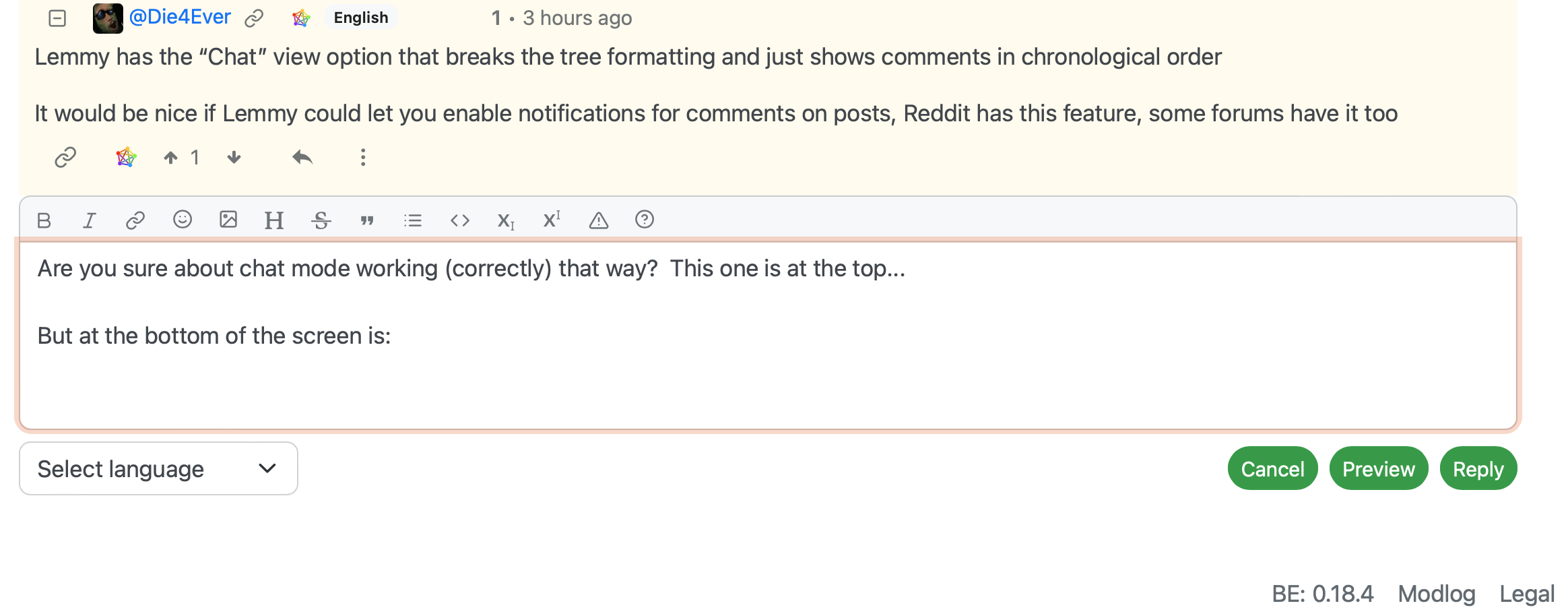

Are you sure about chat mode working (correctly) that way? This one is at the top…

But at the bottom of the screen is:

This doesn’t appear to be in chronological order.

No.

The nature of the checksums and perceptual hashing is kept in confidence between the National Center for Missing and Exploited Children (NCMEC) and the provider. If the “is this classified as CSAM?” service was available as an open source project those attempting to circumvent the tool would be able to test it until the modifications were sufficient to get a false negative.
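To illustrate the general difference between a checksum and a perceptual hash, here is a minimal sketch using a toy "average hash" over a tiny grayscale grid. This is a generic textbook technique, not the confidential algorithm used by NCMEC or any provider; the function names and pixel values are made up for the example.

```python
import hashlib

def average_hash(pixels):
    """Toy perceptual hash: one bit per pixel, set if brighter than the mean."""
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    return tuple(1 if p > mean else 0 for p in flat)

def hamming(a, b):
    """Number of bit positions where two hashes differ."""
    return sum(x != y for x, y in zip(a, b))

def checksum(pixels):
    """Exact cryptographic checksum of the raw pixel bytes."""
    data = bytes(p for row in pixels for p in row)
    return hashlib.sha256(data).hexdigest()

# Hypothetical 4x4 grayscale image (values 0-255).
original = [
    [10, 20, 200, 210],
    [15, 25, 205, 215],
    [12, 22, 202, 212],
    [18, 28, 208, 218],
]
# A slightly brightened copy of the same image.
tweaked = [[min(p + 3, 255) for p in row] for row in original]

same_checksum = checksum(original) == checksum(tweaked)
distance = hamming(average_hash(original), average_hash(tweaked))
```

In this toy case the checksum changes completely after the small edit while the perceptual hash does not change at all. A perceptual hash keeps matching slightly altered copies, and for the same reason anyone with unlimited access to the matcher could keep altering a file until it stopped matching.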

There are attempts to do “scan and delete”, but this may put server admins in more legal jeopardy than not scanning at all, because admins are required by law to report CSAM and to preserve the associated images and log files.

I’d strongly suggest that anyone hosting a Lemmy instance read https://www.eff.org/deeplinks/2022/12/user-generated-content-and-fediverse-legal-primer

The requirements for hosting providers are https://www.law.cornell.edu/uscode/text/18/2258A

(a) Duty To Report.—

(1) In general.—

(A) Duty.—In order to reduce the proliferation of online child sexual exploitation and to prevent the online sexual exploitation of children, a provider—

(i) shall, as soon as reasonably possible after obtaining actual knowledge of any facts or circumstances described in paragraph (2)(A), take the actions described in subparagraph (B); and

(ii) may, after obtaining actual knowledge of any facts or circumstances described in paragraph (2)(B), take the actions described in subparagraph (B).

(B) Actions described.—The actions described in this subparagraph are—

(i) providing to the CyberTipline of NCMEC, or any successor to the CyberTipline operated by NCMEC, the mailing address, telephone number, facsimile number, electronic mailing address of, and individual point of contact for, such provider; and

(ii) making a report of such facts or circumstances to the CyberTipline, or any successor to the CyberTipline operated by NCMEC.

…

(e) Failure To Report.—A provider that knowingly and willfully fails to make a report required under subsection (a)(1) shall be fined—

(1) in the case of an initial knowing and willful failure to make a report, not more than $150,000; and

(2) in the case of any second or subsequent knowing and willful failure to make a report, not more than $300,000.

Child Sexual Abuse Material.

Here’s a safe for work blog post by Cloudflare for how to set up a scanner: https://developers.cloudflare.com/cache/reference/csam-scanning/ and a similar tool from Google https://protectingchildren.google

Reddit uses a CSAM scanning tool to identify and block the content before it hits the site.

https://protectingchildren.google/#introduction is the one Reddit uses.

https://blog.cloudflare.com/the-csam-scanning-tool/ is another such tool.

If you haven’t created an account, subscribed isn’t available.

For selecting which instance you should join, would “local” or “all” be a better choice for the front page for a new user unfamiliar with Lemmy?

If you have whitelisted *.mobile.att.net you’ve whitelisted a significant portion of the mobile devices in the US with no ability to say “someone in Chicago is posting problematic content”.

You’ve whitelisted 4.6 million IPv4 addresses and 7.35 × 10^28 IPv6 addresses.
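As a rough sketch of how address counts like these are tallied, Python's `ipaddress` module can sum the size of a list of prefixes. The prefixes below are placeholders (private and documentation ranges), not the carrier's real allocations, so the totals will not match the figures quoted here.

```python
import ipaddress

def total_addresses(prefixes):
    """Sum the number of addresses covered by a list of CIDR prefixes."""
    return sum(ipaddress.ip_network(p).num_addresses for p in prefixes)

# Hypothetical prefixes, for illustration only.
example_v4 = ["10.0.0.0/10", "10.64.0.0/14"]
example_v6 = ["2001:db8::/32"]

v4_total = total_addresses(example_v4)
v6_total = total_addresses(example_v6)
```

A single IPv6 /32 already covers 2^96 addresses, which is why the IPv6 number dwarfs the IPv4 one.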

Why have a whitelist at all then?

Would you be able to post an image if neither *.res.provider.com nor *.mobile.att.com were whitelisted and putting 10-11-23-45.res.provider.com (and whatever it will be tomorrow) was considered to be too onerous to put in the whitelist each time your address changed?

If you’re whitelisting *.res.provider.com and *.mobile.att.com the whitelist is rather meaningless because you’ve whitelisted almost everything.

If you are not going to whitelist those, do you have any systems available to you (because I don’t) that would pass a theoretical whitelist that you set up?

Do you have a good and reasonable reverse DNS entry for the device you’re writing this from?

FWIW, my home network comes nat’ed out as {ip-addr}.res.provider.com.

Under your approach, I wouldn’t have any system that I’d be able to upload a photo from.

Try turning wifi off on your phone, getting the IP address, looking up the reverse DNS entry for it, and considering whether you’d want to whitelist that. Then do it again tomorrow and check whether it has a different value.

Once you get to the point of “whitelist everything in *.mobile.att.net” it becomes pointless to maintain that as a whitelist.

Likewise *.dhcp.dorm.college.edu is not useful to whitelist.
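To make the point concrete, here is a minimal sketch of what a reverse-DNS wildcard whitelist check might look like. The helper names and the pattern list are assumptions for illustration; this is not how Lemmy or any particular server implements it.

```python
import socket
from fnmatch import fnmatchcase

def reverse_dns(ip):
    """Return the PTR hostname for an IP, or None if there is no entry."""
    try:
        return socket.gethostbyaddr(ip)[0]
    except OSError:
        return None

def is_whitelisted(hostname, patterns):
    """True if the hostname matches any wildcard pattern (case-insensitive)."""
    if hostname is None:
        return False
    host = hostname.lower()
    return any(fnmatchcase(host, pattern.lower()) for pattern in patterns)

# Hypothetical whitelist using the example patterns from this thread.
patterns = ["*.res.provider.com", "*.mobile.att.net"]

today = is_whitelisted("10-11-23-45.res.provider.com", patterns)
tomorrow = is_whitelisted("10-11-99-12.res.provider.com", patterns)
elsewhere = is_whitelisted("host-7.dhcp.dorm.college.edu", patterns)
```

Both `today` and `tomorrow` pass, along with every other customer of that provider. The wildcard matches whatever address the ISP hands out, so the list no longer distinguishes one subscriber from another.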

(h) Preservation.-

(1) In general.-For the purposes of this section, a completed submission by a provider of a report to the CyberTipline under subsection (a)(1) shall be treated as a request to preserve the contents provided in the report for 90 days after the submission to the CyberTipline.

(2) Preservation of commingled content.-Pursuant to paragraph (1), a provider shall preserve any visual depictions, data, or other digital files that are reasonably accessible and may provide context or additional information about the reported material or person.

(3) Protection of preserved materials.-A provider preserving materials under this section shall maintain the materials in a secure location and take appropriate steps to limit access by agents or employees of the service to the materials to that access necessary to comply with the requirements of this subsection.

(4) Authorities and duties not affected.-Nothing in this section shall be construed as replacing, amending, or otherwise interfering with the authorities and duties under section 2703.

I would also suggest reading User Generated Content and the Fediverse: A Legal Primer – https://www.eff.org/deeplinks/2022/12/user-generated-content-and-fediverse-legal-primer

Make sure to follow its advice - it isn’t automatic and you will need to take affirmative steps.

The safe harbor doesn’t apply automatically. First, the safe harbor is subject to two disqualifiers: (1) actual or “red flag” knowledge of specific infringement; and (2) profiting from infringing activity if you have the right and ability to control it. The standards for these categories are contested; if you are concerned about them, you may wish to consult a lawyer.

Second, a provider must take some affirmative steps to qualify:

Designate a DMCA agent with the Copyright Office.

This may be the best $6 you ever spend. A DMCA agent serves as an official contact for receiving copyright complaints, following the process discussed below. Note that your registration must be renewed every three years and if you fail to register an agent you may lose the safe harbor protections. You must also make the agent’s contact information available on your website, such as a link to a publicly-viewable page that describes your instance and policies.

Have a clear DMCA policy, including a repeat infringer policy, and follow it.

To qualify for the safe harbors, all service providers must “adopt and reasonably implement, and inform subscribers and account holders of . . . a policy that provides for the termination in appropriate circumstances of . . . repeat infringers.” There’s no standard definition for “repeat infringer” but some services have adopted a “three strikes” policy, meaning they will terminate an account after three unchallenged claims of infringement. Given that copyright is often abused to take down lawful speech, you may want to consider a more flexible approach that gives users ample opportunity to appeal prior to termination. Courts that have examined what constitutes “reasonable implementation” of a termination process have stressed that service providers need not shoulder the burden of policing infringement.

…

And further down:

Service providers are required to report any CSAM on their servers to the CyberTipline operated by the National Center for Missing and Exploited Children (NCMEC), a private, nonprofit organization established by the U.S. Congress, and can be criminally prosecuted for knowingly facilitating its distribution. NCMEC shares those reports with law enforcement. However, you are not required to affirmatively monitor your instance for CSAM.

The devs (nutomic and dessalines) and the instances that they are involved with hosting and upkeep (lemmy.ml and lemmygrad.ml).

It also lacks any of the fraud prevention and detection measures that retailers need.

Providing just a searchable marketplace that then dispatches you to buy from the store where you have to enter your payment information for them? Sure. And I’d use it. But then, that’s what the ‘shopping’ item on Google is.

If you then say “but I’ve got to enter my payment and shipping info with the store again” - is that as much of a problem as you make it out to be? Because they do need it to do the payment processing and shipping. Fortunately my browser knows who I am and so it auto fills much of that information.

The payment processing is a major hurdle. You’re dealing with each company and each company has its relationship with the payment processor. They have different rates depending on how trustworthy they are and how much business they do. The less trust in the system, the more it costs. The smaller the volume, the more it costs again.

The companies that leak credit card data? They’re gonna pay with increased payment processor costs. You may not see it, but behind the scenes you’ve got https://www.commerce.uwo.ca/pdf/PCI-DSS-v4_0.pdf (that’s a 350 page checklist for the standards for handling payment cards).

Assume that we’ve handled payment… logistics. Amazon has their enormous warehouses and automation that they’ve invested in (the robots are originally from Kiva - https://www.fromscratchradio.org/show/mick-mountz ). Having everything in one place and then dispatching works well and saves money. If I buy something from Best Buy and something else from Pottery Barn and something else from Williams Sonoma – they don’t share a warehouse and so they’re each doing their own shipping with whoever they’ve contracted to do shipping.

Amazon again got big enough that they’ve got their own last mile shipping (and since they’ve got coverage with distribution centers it makes it even more efficient). Shipping from DC to DC is cheap - it’s the last mile that has the expenses.

So, it’s cheaper (and more carbon / energy efficient) for someone to buy from Amazon and get one package from a marketplace where Amazon manages the payment, inventory, and logistics for delivery than it is to have it be managed from multiple vendors each with their own payment, inventory, and logistics.

Presenting the marketplace is the ‘easy’ part of this. Payment, inventory, and logistics are hard and aren’t solved by federation but rather made more complex and worse from the standpoint of the consumer.

New accounts are being created.

How many of those new accounts are people shuffling between different identities and interests? This account is for the music stuff I follow, this is my meme account, this is my serious stuff account…

How much of that is also the people leaving lemmy.world when they were having stability problems?

curl https://literature.cafe/post/641633

The text ‘A large issue’ appears multiple times in the payload (frankly, surprisingly many).

Inspecting it with xxd:

00004530: 6f6e 652e 295c 6e5c 6e41 206c 6172 6765 one.)\n\nA large

Further down in the output (and the only case of this happening):

000487a0: 6c6f 6e65 2e29 2041 206c 6172 6765 2069 lone.) A large i

In this instance, the A (byte 41 in hex) is preceded by 20, which is a space.

The number of times it shows up:

% xxd post.txt| grep 'A large' | wc -l

27
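One caveat with grepping `xxd` output: each row shows only 16 bytes, so a phrase that straddles two rows is not matched and the count can come out low. A minimal sketch of counting on the raw bytes instead, and recording what precedes each hit, might look like this. The sample bytes are made up to mirror the two cases shown above; in practice you would read the saved `post.txt`.

```python
def find_occurrences(data: bytes, needle: bytes):
    """Return (offset, two preceding bytes) for every occurrence of needle."""
    hits = []
    start = 0
    while True:
        idx = data.find(needle, start)
        if idx == -1:
            break
        hits.append((idx, data[max(0, idx - 2):idx]))
        start = idx + 1
    return hits

# Hypothetical sample: one copy preceded by an escaped newline (bytes 5c 6e),
# one copy preceded by a plain space (byte 20).
sample = b"one.)\\n\\nA large issue; alone.) A large issue"

hits = find_occurrences(sample, b"A large")
after_space = [offset for offset, before in hits if before.endswith(b" ")]

# With the real payload:
# with open("post.txt", "rb") as f:
#     hits = find_occurrences(f.read(), b"A large")
```

Filtering on the preceding bytes separates the copies that sit after an escaped `\n` from the one that follows a space.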

It’s possible that your reader is picking up the wrong instance of the text… which also raises the question of why there are that many copies in the first place.

If the appearance of some social media is “there is reasonable discussion but as soon as something shows up that’s awful, discussion stops there, but it remains up for all to see” - that’s going to have difficulty bringing in new people.

Instance blocking but still giving the appearance of condoning the content is going to lead to what appears to be toxic spaces to everyone who hasn’t taken the time to carefully cultivate their block lists.

Ignoring and personally blocking users and instances means that admins and moderators won’t have as good a view of the health of the communities when everything ends with a troll comment that no one but new users sees.